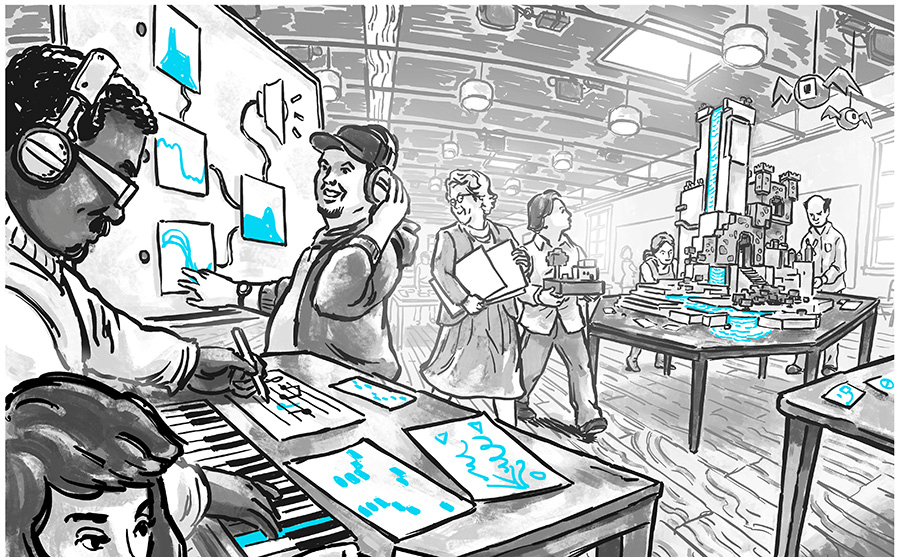

Illustration by David Hellman for Dynamicland

The Future of Software Design and Development


Preface

The future is not one thing; it's a multitude of things, and I may have stumbled on a few of them. Some will feel unrelated or irrelevant to each other, but when connected, they create a whole, a vision of a possible future.

The following ideas and thoughts are not of my own invention, I’ve merely extrapolated them from what I’ve already read and seen.

While the article is written linearly, the topics are not. It’s my hope that by the end, the ideas are formulated in your head and you’ll be able to connect the dots.

The Future of Design Tools

The Stagnation of Design Tools

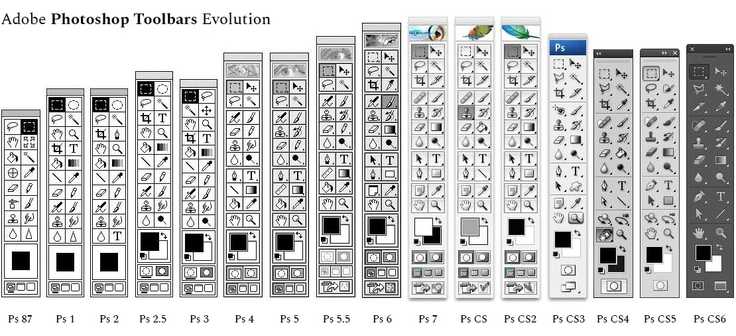

Some of the first widely used digital design tools appeared in the 80s; one of them was MacPaint.

As you can see, apart from a few added features, today's design tools don't look that far off from what we had back then.

Back then, there was a bit of magic: these tools introduced a fundamentally new way to interact with the computer, a new way to express ourselves.

Although we’ve added a lot to our design tools since then, they feel like incremental improvements. We've kept reskinning and repackaging what we already had until some of the magic got lost.

The current design tools weren’t built for today's needs; they were built for a static era.

A Brief History of UI Design Tools

Static Design Tools

Designers designed app screens as static images with tools like Photoshop.

Developers had to "slice" the images to make the UI.

You couldn't "inspect" the image for fonts, spacing, colors, nothing.

Developers used external tools to extract and measure values from the image.

The design was a dead static asset, destined to be thrown into the virtual dumpster.

Medium Aware Design Tools

Since then, design tools such as Sketch and Adobe XD have become aware of the medium they design for.

They have built-in tools to measure spacing and inspect colors and font styles, and they can even output copyable code (such as CSS).

The developer had an easier time taking the "still" static asset and turning it into the "real" thing.

WYSIWYG Design Tools

WYSIWYG design tools like WordPress and Wix allowed non-developers to build actual sites and apps with "no code".

While these tools empowered the commons, they've also locked them into their walled gardens.

These design tools were built to be in a closed ecosystem, not to interact with the dangerous outside world.

These tools don't usually play nicely with developers: they don't output the most maintainable code, they're not very open to the wider development ecosystem, and although some of them are powerful, they are limited by design.

Closed WYSIWYG design tools aren't the way to go. The solution isn't to create your own ecosystem, and it isn't to give designers a fenced-off area to play in that they can never go beyond.

There must be something else, a place where designers and developers can exist in unity.

Component-Based Design Tools

Design tools like Figma have gotten "smarter": they adopted the same paradigms developers use, namely components and variables.

You can design components that are reusable, accept dynamic data, and have multiple variants and states.

And you can store colors, spacing scales, fonts, and other values in reusable design tokens.

This shift in design tools made designers and developers think alike; they both share the same mental model of what the design should be and how it should behave.

But the developer still needed to take that "still" static asset and reimplement the designer's intentions as actual usable, production-ready components.

And here is where things get a bit fuzzy.

Because what fundamentally happened here is that the design was implemented twice.

The intentions and the mental model of the designer were clear in the design tool, why does it need to be "translated" by a developer into code?

This is just plain inefficient.

Design to Code Tools

There are tools like Anima that tried tackling the inefficiency by "auto" translating design to code.

They translate the design by giving you a way to export components or design tokens as code.

This initially sounds like a good idea, but it's built on a fundamental flaw.

It treats design and development as different artifacts, where the design is something that "needs" to be translated rather than being the actual thing itself.

The problem is treating design and development as different mediums.

Code-Based Design Tools

Now we're seeing design tools like Modulz, Relate, and Builder.io that don't require designs to be "translated" into code, because the design is the actual code: these tools are built from the ground up to design with actual production-ready code.

It's giving the designer the same capabilities as the UI developer, just with a different interface, an interface that is fundamentally more suitable for the task at hand.

Designs were never meant to be written, we are visual creatures and design is inherently visual.

Creating designs with text is creating with the wrong level of abstraction.

3D and Interactive Design Tools

Our sites and apps were mostly static and 2D, and the design tools were optimized for that.

But with the move to 3D and interactivity, tools like Rive and Spline are being built from the ground up to output production-ready interactive animations and 3D experiences.

The Death of Static Design Tools

There are powerful design tools out there, but they're built on shaky ground.

Any design tool that isn’t built from the ground up to make the “real” thing, and instead treats code as an afterthought, will eventually shrink until it ends up on the walls of the digital design museum.

Design and development are fundamentally the same thing: they're both about creation, the former in the aesthetic and formal sense, the latter in the technical and logical sense.

“A "user interface" is simply one type of dynamic picture. I spent a few years hanging around various UI design groups at Apple, and I met brilliant designers, and these brilliant designers could not make real things. They could only suggest. They would draw mockups in Photoshop, maybe animate them in Keynote, maybe add simple interactivity in Director or Quartz Composer. But the designers could not produce anything that they could ship as-is. Instead, they were dependent on engineers to translate their ideas into lines of text. Even at Apple, a designer aristocracy like no other, there was always a subtle undercurrent of helplessness, and the timidity and hesitation that come from not being self-reliant.

It's fashionable to rationalize this helplessness with talk of "complementary skillsets" and other such bullshit. But the truth is: An author can write a book. A musician can compose a song, an animator can compose a short, a painter can compose a painting. But most dynamic artists cannot realize their own creations, and this breaks my heart.

”

The Convergence of Design and Development

“A designer can draw a series of mockups, snapshots of how the graphic should look for various data sets, and present these to an engineer along with a verbal description of what they mean. The engineer, who is skilled in manipulating textual abstractions, then implements the behavior with a programming language. This results in ridiculously large feedback loops—seeing the effect of a change might take a day instead of a second. It involves coordination and communication between at least two people, and requires that the designer justify herself—she must convince the engineer and possibly layers of management that each change is worth the engineer’s time. This is no environment for creative exploration.

”

I believe there is a point where design and development converge.

The point where the designer says they're designing and the developer says they're developing, while they're essentially doing the same thing.

Designers will not hand anything over to developers because there is nothing to hand over. There is no design-to-code pipeline, only a playground where people collaborate.

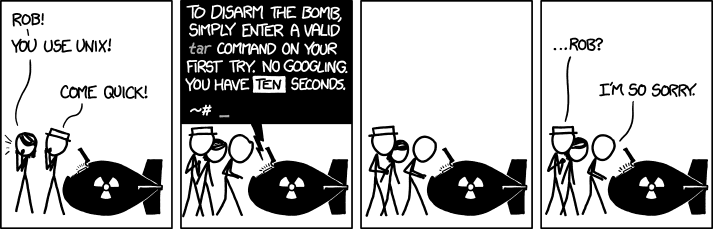

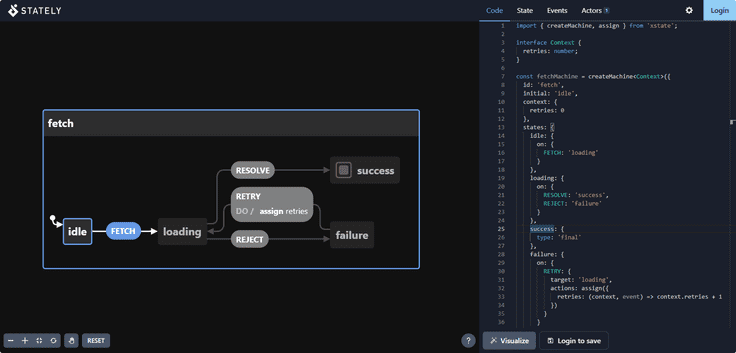

Designers, like developers, will have their work version-controlled. They'll make pull requests, install third-party components and libraries right from the design tool, get linting like in an IDE, have their designs auto-documented alongside the code (think Storybook and Playroom), create stateful components with tools like Stately, and push straight to production.

And for design and development to converge, we'll need to fundamentally change how we develop software.

“Blur the lines between designing, building, and running the system; ideally there is no distinction between these modes. Minimize perceptible waiting times (like there are today for building or compiling). The system runs continuously and is modified directly — like shaping a digital material in real-time.

”

The Dark Age Of Programming

You would think we are at the pinnacle of knowledge, that we've mastered the art of software.

But frankly...

We don't know what programming is

The people who programmed with punch cards and binary thought they knew what they were doing.

The people who programmed in assembly thought they knew what they were doing.

The people who programmed in low-level programming languages thought they knew what they were doing.

The people who now program in high-level programming languages think they know what they're doing.

We never know what we're doing.

“Everything that came before you was too much boilerplate. Everything that came after you is too much magic.

”

The way we do things now isn't necessarily the "best" or "only" way of doing them; we shouldn't get stuck in dogma.

Instead of looking at the newest frameworks and incremental upgrades to how we already do things, we should re-evaluate, from the ground up, how we ought to do things.

Drawing With Bricks

Imagine I gave you a sheet of paper and told you to write your name, but you had to write it with a pen tied to a brick.

I'd imagine after that you'd want to write your name right into my face 😅.

Nonetheless, you'd be frustrated.

Why? Because the brick is damn heavy, your hand is cramping, your precision is that of a monkey, and that 'r' you wrote looks like an impaired 'n' standing on one foot.

What if that were the only way to write?

Imagine Einstein trying to write down his theories until his forearm went numb, then saying "darn this! I'll just go milk goats for a living!"

Imagine the history that would've been lost, for there was no forearm strong enough to record it. Imagine the books, research, stories, ideas, drawings, graphs, letters, maps, notes, and fliers that would've never seen the light of day.

Writing, history, and knowledge would be reserved for the nation of the heavy-handed and the firm-wristed. The weak-handed would say "we're not meant to be writers, we weren't gifted with a writer's hands."

But the problem wasn't with the people. The problem was in the friction.

The friction to create, to write, to express. People were constrained by their tools.

The tools killed your ideas and those of billions of others.

You might be wondering what the heck I'm on about; we already have pens and pencils.

But what I'm implying, dear reader, is that there are people who think they are "bad at math" or "not meant for programming", and I believe our tools have failed them, miserably.

The problem isn't that programming is difficult and math is hard; the problem is in the way we represent them.

We don't think in or in...

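```perl
use strict;
use warnings;

# Quicksort: take the last element as the pivot, then sort the
# smaller elements before it and the rest after it.
sub quick_sort {
    my @a = @_;
    return @a if @a < 2;    # 0 or 1 elements: already sorted
    my $p = pop @a;         # the pivot
    quick_sort(grep $_ < $p, @a), $p, quick_sort(grep $_ >= $p, @a);
}
```

Some of you have the forearm to decipher the above symbols, and others look at them with fear and dread, running from them on sight.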

Humans don't think with static symbols, these are just the abstractions we chose to communicate those concepts to each other or to a machine.

Those are the bricks tied to our pens, the pens of logic and thought.

“The example I like to give is back in the days of Roman numerals, basic multiplication was considered this incredibly technical concept that only official mathematicians could handle,” he continues. “But then once Arabic numerals came around, you could actually do arithmetic on paper, and we found that 7-year-olds can understand multiplication. It’s not that multiplication itself was difficult. It was just that the representation of numbers — the interface — was wrong.

”

Hammer and Nail for The Mind

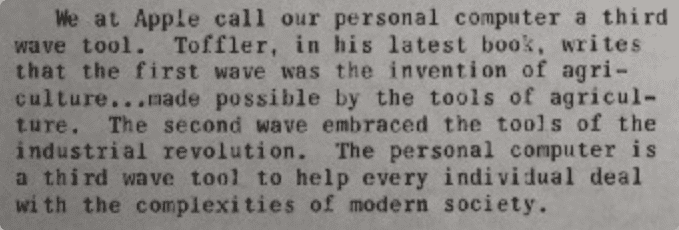

“When we invented the personal computer, we created a new kind of bicycle…a new man-machine partnership…a new generation of entrepreneurs.

”

The bicycle for the mind is a great invention: it took us across borders, let us wander in places we wouldn't have imagined wandering, and augmented the legs of our mind, letting it step further than it could alone.

But with a bike, it's hard to go on roads that weren't meant to be ridden on. The rider can't build their own road; they're at the whims of the road builders.

The bike is not a building tool, it's a navigation tool and navigation tools are inherently passive.

What I dream of is a building tool, a tool that lets minds not just consume but create, a hammer and nail for the mind.

The Collapse of Abstractions

Google Services (the ones you "probably" use every day) are built with about 2 billion lines of code.

Yes, a whole two billion. If you printed that as a book, it would be 36 million pages long. If you stood that book beside the Burj Khalifa, you'd need to stack about three and a half more Burj Khalifas on top of it to match the book's height.

Our average reading speed is roughly 2 minutes per page, so reading the whole Google services codebase would take you about 137 years of non-stop reading. That's nearly two lifetimes before you finish!
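The 137-year figure follows directly from the page count and reading speed above (taking a year as 365 days):

$$
36\,000\,000\ \text{pages} \times 2\ \frac{\text{min}}{\text{page}} = 72\,000\,000\ \text{min} = 1\,200\,000\ \text{h} = 50\,000\ \text{days} \approx 137\ \text{years}
$$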

And that's just reading, imagine trying to understand it all 👀!

Is that normal? For the things we use to be beyond anyone's comprehension?

You can probably explain each one of Google's services on one sheet of paper, but to explain them to a computer we'd need the equivalent of millions of papers.

This shouldn't be the case.

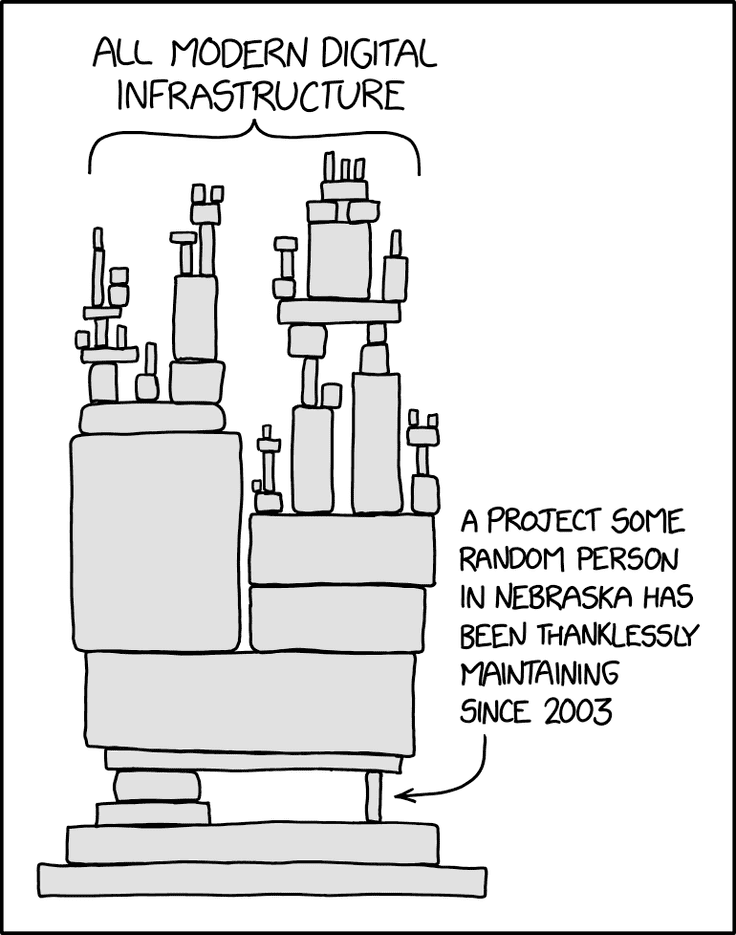

We've built software by throwing sloshes of abstractions with our leaky buckets into our codebase until we could’ve filled the Atlantic.

We've built our software on foundations we cannot understand, hoping that the foundation builders did their due diligence, while the foundation builders themselves question the soil beneath them.

We pray that our software stands, until one day, while we are at the heights of our architectures, looking down at the fog of dependencies, we hear a crack, we're falling from the soar of the skies, our abstractions are collapsing, they cannot withstand, their model of the world does not match reality, we've added complexity beyond maintenance, it can no longer co-exist with the universe.

We see ourselves buried in dirt, looking around at the rubble from the mountains of infrastructure. "Oh... how will we build all of this again?" And all you see are lost, blank, pale faces.

Sophistication Made Simple

The Elements Of The Universe

There are currently 118 known elements, and these simple elements are what shape us and the world around us.

Although the world is full of various complex systems and models, their building blocks are not.

That means in order to build complex systems, you don’t necessarily need more sophisticated tools, but rather you’ll need better (and simpler) abstractions.

What we need are abstractions that are comprehensible: abstractions where someone can look behind the curtain and understand what's going on, where someone can tinker and change the system into something beyond the scope and intention of its original creators.

Smalltalk

Smalltalk enabled people who aren’t considered programmers, like kids and animators, to create sophisticated tools using only a few pages of code, as if writing an essay. And those few pages are so comprehensible that a kid can read them and add or change whatever they want.

Smalltalk is a good example of a "human" comprehensible programming language. The future of programming should follow the spirit of Smalltalk.

Dynamicland

“The computer of the future is not a product, but a place.

”

Dynamicland is a place, a medium where computers are released from their boxes and unleashed to make the place itself a living thing.

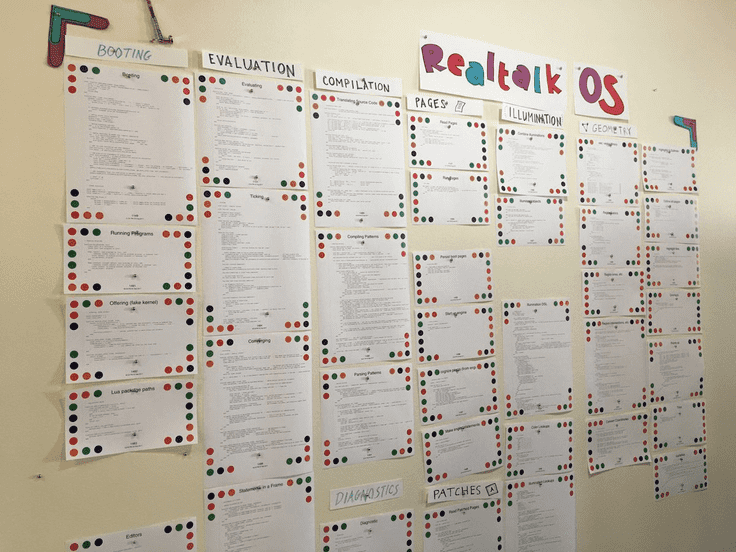

The idea is simple: instead of people manipulating virtual objects on a screen, they interact with real objects that are “dynamic”. Cameras and projectors in the room recognize these objects and run their code (currently, the supported objects are sheets of paper marked with colored dots, with code on them written in “Realtalk”).

And the whole operating system of Dynamicland is written on this wall 👇

As you can see, the whole thing can be read, comprehended, and even manipulated by one person.

And it can enable cool things like pinball!

Interactive maps!

Laser socks!

And many more!

The system is quite simple, yet the applications you can build with it are varied and sophisticated.

The Divide Between Beginner Tools and Professional Tools

There's a great divide between how programming is taught and how it's used professionally.

When kids are taught programming, you might teach them via a visual programming language like Scratch.

Or if someone is trying to learn how to build websites, maybe they’ll start off with a no-code tool like Webflow.

But when they want to dive deeper into programming, the lights go out, the rug is pulled from under their feet, and they're scared in the dark: "Forget what you know, throw away what's in your hands and take this, this is what real programmers use!"

And they're stuck, "Do I really need to relearn what I just barely knew?"

So they either find comfort in the thing they just knew, or they dive into the unknown.

But why do we need a transition between beginner and professional tools?

Why can't they be the same tool?

Both the toddler and the artist use the same pencil and paper, one draws a rabbit and the other draws the lands of starry nights.

Both the amateur and the rockstar use the same guitar with the same six strings, one plays it in the basement and the other plays it in front of nations.

Both the hobbyist and the expert use the same camera, one posts their photos on their feed and the other is featured on billboards and magazines.

They all use the same tool, the complexity and talent scales based on the individual, but the tools remain the same.

There doesn’t need to be a division in tools, there is no need for a transition between modes.

You might be wondering, isn't programming too complex to be contained in one tool?

And I would say to you: yes! Programming would be too complex to bundle into one thing, because programming isn't one thing; programming is an infinity of things. But the tools we use don't have to be!

Think of pen and paper: they don't ask you if you want to draw, write, make a list, create a table, or choose a predefined template.

Imagine trying to make a paper airplane and it says "UNSUPPORTED, PLEASE INSTALL ORIGAMI PLUGIN TO TRANSFORM TO PAPER PLANE" or "UPGRADE TO v17.0.5-BETA TO USE THIS FEATURE!"

The pen and paper don't give a darn about what you do with them, you can hang fliers, draw maps, play XO, or make it into a ball and play hoops with your buddies.

All of that complexity is defined by the user, there are no limits, no constraints, the tool though, the tool remains simple, a blank sheet of paper and a pen.

We need to create the pen and paper for programming, an open-ended tool, where young and old, illiterate and literate, beginners and professionals can express their thoughts and creativity without limits.

So how can we create such a tool?

Open-Ended Tools

“open-ended tools are where value comes from. Browsers and spreadsheets are responsible for trillions in value creation. The more Notions and Figmas the more value we’ll generate together.

”

Open-ended tools give you a set of utilities and let you define what you want to do with them; the tool never defines the experience, the user creates their own.

Examples of open-ended tools include:

- Figma: it defines how to draw, not what to draw

- Webflow: it defines how to build a site, not what site to build

- Notion: it defines blocks to use, not what to write and organize

- The browser: it defines how to view websites, not what website to build or go to

- Dreams: it defines tools for creating games, not what games to create. Dreams is so underrated; the only problem is that it's a closed ecosystem. Imagine having something like Dreams that could be accessed from the web! Imagine the amount of creativity that could be created and shared!

- Minecraft: it defines blocks and tools to use, not what to build with them

- Lego: it defines how pieces interact with each other, not what you build with them

And of course, the fundamental open-ended tool: pen and paper.

Imagine if there were such a tool for creating software, where people have power and control over their software: inversion of control, applied to software creation.

The End of The App Era

“Software is trapped in hermetically sealed silos and is rewritten many times over rather than recomposed.

”

Apps are closed gardens: you are bound to the white fences the app developers predefined for you, your data is hoarded and locked behind closed vaults, your ideas can’t go beyond what the app creators could imagine, and to get what you need, you have to plead your case to the app makers, hoping they’ll add it to their wishlist, then wait season after season hoping your wishes will land.

“The App economy is also just a massive waste of human capital—just think about all the wasted time we have collectively spent engineering the same feature again and again for every new App, when we could have been crafting unique commands that users can choose to obtain a la carte.

”

It’s time to end the tyranny of apps, the next era should be led by open-ended tools.

In that era, apps will be unbundled into:

- Dynamic interactive blocks: which users combine to build their software and workflows

- Data: which the user creates, which can be shared among workflows, and which the user can optionally even aggregate and sell online (as statistical data)

- Storage: to store data and workflows, whether locally or in the cloud

- Curations: where people can browse curated lists of blocks, data, and storage to implement a certain workflow (similar to Notion templates or VS Code extensions, but for software)

In that way, people will be able to create software tailored to their needs. They can even remix other software and workflows to their liking, and they’ll have full ownership of their data because it will live in their own storage, not someone else's.

“Blur the line between developers and users. Enable people to modify the systems and tools they use and adapt them to their needs. Crack open the monolithic app model and expose finer-grained components and workflows to be combined in new ways. Apps can still coexist as a delivery mechanism for pre-packaged workflows that can be re-designed and potentially even integrated across different apps.

”

“the restaurant food is truly “better” in many ways, and there’s a role for restaurants in society…But I wouldn’t want to live in a world where no one cooks, and food is something we can only choose off a menu. Software is increasingly heading to that place.

”

“A drawing tool for dynamic pictures should make sharing elements as easy as copy-and-paste. I believe that the evolution of the medium requires such ease of sharing. Artists must be able to build on each other's work.

”

Programming For The Rest of Us

“It's the responsibility of our tools to adapt inaccessible things to our human limitations, to translate into forms we can feel. Microscopes adapt tiny things so they can be seen with our plain old eyes. Tweezers adapt tiny things so they can be manipulated with our plain old fingers. Calculators adapt huge numbers so they can be manipulated with our plain old brain. And I'm imagining a tool that adapts complex situations so they can be seen, experienced, and reasoned about with our plain old brain.

”

In the '80s, before Apple made the Macintosh, buying a computer meant learning how to program it. What Apple did was make it possible to use a computer without needing to program; it democratized the computer.

Computers have made it easy for the commons to use software, but it didn't make it easy for the commons to build software...yet!

The main problem is that to build software, humans need to speak computer, but what we'd rather have is a computer that speaks human. And to do that, the computer shouldn't just understand when we click a mouse or type on a keyboard; it should understand our eye movements, our hand waves, our facial expressions, the way we move, the way we speak, the way we understand.

When someone wants to do something with their computer, they'll install an app for it and if they want to add a certain feature they’ll need to request it from the app builders, or they could learn to program themselves to create their own software.

These options are subpar, someone should be able to make the computer do what they want without depending on a company to do their wishes, they shouldn't need to learn the ins and outs of the computer to do so, they should be able to do it without friction.

“Software must be as easy to change as it is to use it.

”

“People should not have to adapt to the tool; the tool needs to adapt to people. If using the tool effectively is only possible if you understand how the tool works, it needs more work until it works more like you think.

”

Why Visual Programming Isn't The Solution

Visual programming isn't really a step up the abstraction ladder, it's more like using a stamp instead of a pen, it's easier to use but harder to express with.

The problem is that it abstracts the programming language itself (for loops, if conditions, etc.) but not how we think, making it a kind of wrapper.

It's like using a programming language with training wheels; eventually, there comes a point where you're better off without them.

So visual programming should go beyond blocks, it should visually represent our thoughts, not represent language syntax.

Damn the Terminal

The terminal is a one-dimensional way to interact with a computer.

Some swear by the terminal, and others swear at it.

If you want to be a "real" programmer, you need to learn how to use the terminal!

But I believe this is far from the truth; we've just inherited dogma from the '80s.

We don't need a terminal, a terminal is a pen tied to a brick.

To use a terminal, you need to learn absurd acronyms and syntax like grep and sudo,

and you'll write stuff like

```shell
cp file file2 -r folder
```

and

```shell
sudo pr --test -fr
```

Even if you're a veteran terminal hacker, you probably look up which flag to pass to grep from time to time.

And why is that?

Why use a tool that's so wretched, one that throws all the rules of readability out the window, where the command to view a file looks just like the one that will erase the entire device, where you navigate your computer line by line, where we lust so much for an actual UI that we simulate screens inside the terminal? Why use a tool that only lets you use your computer through a peephole?

Yes, a terminal is powerful, it lets us pipe data between programs, it lets us do powerful commands with a few keystrokes, it lets us use the whole computer without touching the mouse, it can make us way more efficient.
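For readers who haven't met one, a pipe (|) feeds the output of one program into the input of the next. Here is a minimal sketch of that idea, with three lines of made-up sample text standing in for a real file:

```shell
# printf emits three lines of sample text,
# grep keeps only the lines that contain "TODO",
# and wc -l counts how many lines made it through.
printf 'TODO: write draft\nfix typo\nTODO: publish\n' | grep 'TODO' | wc -l
```

This prints 2 (some systems pad the number with leading spaces). None of the three programs knows about the others; the pipe is what composes them.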

But have you thought about why it's powerful? It's not because of the terminal itself; it's because our brightest (and laziest 👀) minds have been hacking on it for decades until they made it powerful. They implemented terminal programs that helped them in their day-to-day work, they automated most of their workflows, they built tools to share their tools, and they built a whole ecosystem exclusive to themselves.

Imagine if all that effort had been put into something more...human?

I hope for a world where all the power of the terminal remains, but without the terminal itself.

Where the commons can easily use the same tools as our greatest hackers.

Programming in The Pit of Success

“Make mistakes impossible (e.g. no syntax or type errors) or easy to revert (e.g. undo and redo, history graphs, time travel debugging). Move technicalities and implementation details (e.g. memory management) out of the way, but keep them accessible. Treat failure states as teaching opportunities.

”

When people sketch out a new idea, they might draw on paper, see what could be adjusted, then iterate. The problem with software tooling is that the moment you make a mistake, the screen screams at you in bright red about how wrong you were. It breaks the flow, it causes friction, and it demotivates you by constantly shoving your mistake in your face.

When you make a drawing, you might get the perspective wrong, the lines could've been thinner, the shading might not be realistic, and yet the drawing still "works".

When you cook, if you add too much salt, the pot doesn't explode. You just end up with a salty soup, and you learn not to add that much salt next time.

A kid can write, a kid can draw, a kid can cook, but a kid won't enjoy coding if the code keeps shouting at them.

And it would be a disservice to say that programming is only for adults or for the elites who know the ins and outs of computers. Code is an extension of how we think, and all of us think.

It's like saying writing should only be taught to adults because kids can't write research papers, annual reports, books, articles, and whatnot. Kids can write, just not the same things that adults do, they write letters, stories, lists, games.

So software, too, should be forgiving: it should have the empathy to tolerate human mistakes and help the user avoid them in the first place.

That's why things like syntax errors should not exist. The tool itself should not have an error state; it shouldn't let the user write invalid syntax to begin with (the same way a block-based visual programming language has no syntax errors). The only errors that should remain are logical errors.

Programming is The New Literacy

First there was language, then writing, and now there is programming.

These are the levels of human literacy; with each level, we were able to express what we never could with the level before it.

In the early days of any level of literacy, only a few can wield it, but with time, the few become the many until it becomes the norm.

And just as with spoken languages, there will be programmers who don't know the formal grammar and syntax of their programming language yet are fluent in it, just as there are speakers who give talks and speeches, and even write books, without knowing the formal grammar of the language they're using.

Likewise, there are language teachers who explain the intricacies of a language they don't use in their own day-to-day lives.

And just as we teach our kids their ones and twos (once exclusive to the elites of society), we'll teach them their ifs and whiles, and just as kids play with numbered cubes to learn math, we'll see kids on the playground playing with tools that visualize their thoughts.

The Power of Explorable and Experimental Feedback

“Encourage exploration and experiments. Don’t expect people to already know what they want or need, let them discover through playful exploration. Creation is discovery.”

Creators don't always know what they're creating beforehand: artists don't know what a drawing will look like until it's finished, and writers don't know how a sentence will end until they write the last word.

There is something magical about letting creativity flow into the thing we create, but it feels like our current tools have shut that flow off. They require us to give them exact commands on what to do; they've turned creation into something that is too... mechanical.

Our tools should encourage serendipity, or should I say, happy accidents 😁

“Many breakthroughs were accidents, discovered along a path that was supposed to lead somewhere else. That’s why creators need immediate connection with what they are creating and a tight feedback loop that enables them to see changes without delay.”

Direct Manipulation and The Inhumane Interface

Imagine you're molding clay, but you're not allowed to mold it directly.

Instead, there is a machine you write commands to, and it molds the clay for you. How fun would molding clay be then?

Not as fun, I'd guess. Why? Because we gave something up. We gave up using our intuition and our hand muscles to express the curves and shapes we want. We gave up the direct feedback from the pressure we apply with our fingers. Instead, we replaced it with an analytical, mechanical tool, where you need to think about what the output should be before you "input" the command.

We gave up expressing our intuitive creativity.

It's something our software tools sorely lack: the thing you manipulate is often on a different screen from where you manipulate it.

“Modifying a system should happen in the context of use, rather than through some separate development toolchain and skill set.”

The closest we've come to direct manipulation in software is 3D design tools.

The Inhumane Interface

We interact with our devices by pushing keys on a board and by pressing, swiping, and pinching on glass.

Our interfaces use only a minute fraction of our dexterity, while we are capable of so much more.

Collaborative Programming

“This is what it means to do knowledge work nowadays. This is what it means to be a thinker. It means sitting and working with symbols on a tiny rectangle... This style of knowledge work, this lifestyle is inhumane.”

Programming is mostly an individual endeavor, and that's a massive shame.

The collaborative parts of programming are the activities around it, like discussing issues or reviewing code, but the act of creation itself is isolating (pair programming aside, and it's hard to fit more than two people into a pair session).

Imagine a classroom where each group of students is assigned a task. As a teacher, you can see the state of each group at a glance: whether someone isn't engaged or a group is stuck. Within each group, people can easily share things and work together; there is an open space for collaboration.

When programming, on the other hand, we use a computer that is meant for one person. For someone to know you're stuck, you need to alert them, then catch them up on your thought process, and only then can they give you a pointer or take over and solve it for you.

We need to let people program together more fluidly and think collaboratively. We need to tap into people's collective intelligence instead of leaving each of them siloed in their own box, and we need to make authoring casual and social.

“To improve our collective ability to solve the world’s problems, we must harness the immense promise and power of technology.”

The Transition Between Abstractions

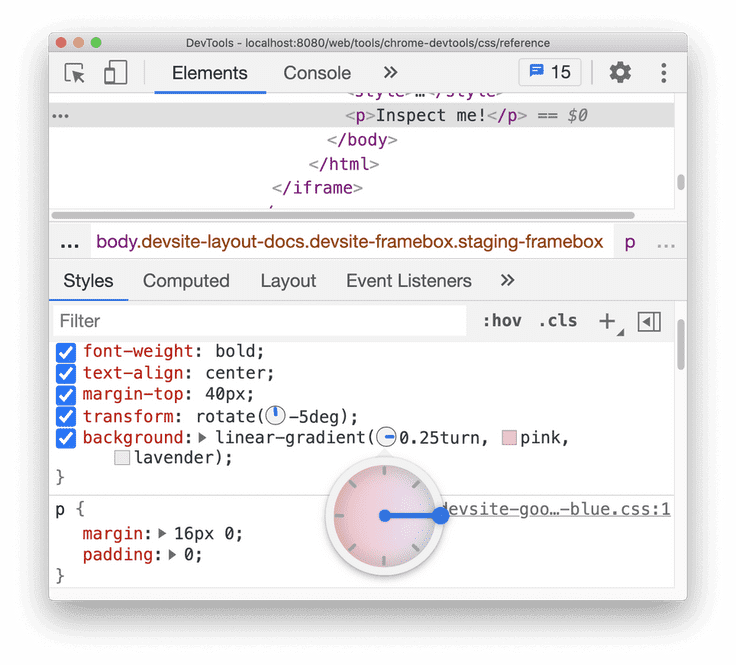

What's better for development, a visual interface or a textual one? I think that's the wrong question to ask. We don't think in just one mode.

When molding clay, you rotate and shape the whole object with your hands.

When you want more precision, you use your nails or a specialized tool.

The same goes for drawing: sometimes you paint from the wrist for precision, and sometimes from the arm for smoother lines. You use different brushes, different strokes, erasers, and rulers. Each requires different muscles and a different mode of thinking.

We don't think in one way, some things are better represented visually and some are better represented textually.

It's like asking which chart is best for representing some data.

There is no "best" chart, only the best chart for a given use case, and that chart is usually the one most in line with our intuition.

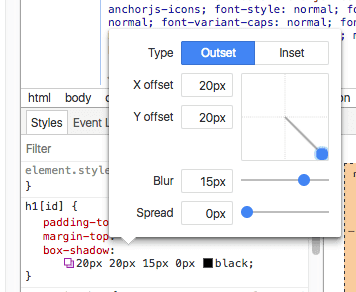

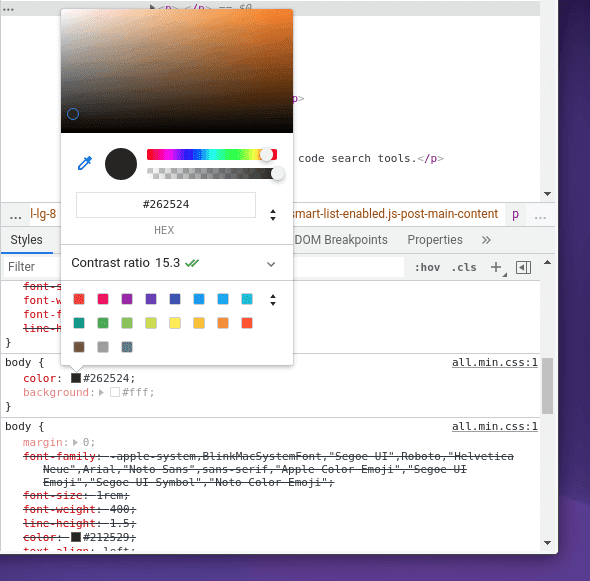

An intuitive way to visualize color is with a color wheel.

An intuitive way to manipulate numbers is with a slider.

An intuitive way to visualize states is with state machines.

An intuitive way to visualize an algebraic function is with a graph.

An intuitive way to visualize a geographic location is with a map.

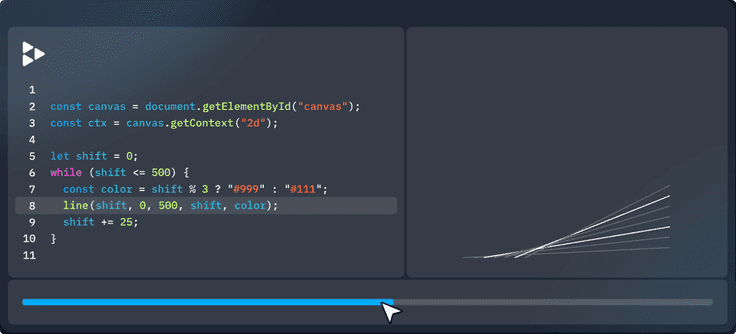

An intuitive way to visualize iterations is to see how they progress through time and space.

But our environments represent all of that in text, quotes ", and brackets {…how awful.

Our environment and programming languages should support visualizing and moving between these "modes" of thinking.
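Here is a small illustration of moving between modes, as a hypothetical TypeScript sketch. A state machine is declared once as plain data, and the same definition is both executed (the behavioral mode) and turned into Graphviz DOT text that a diagram renderer such as Graphviz's `dot` could draw (the visual mode). The traffic-light states and the `step`/`toDot` names are invented for this example.

```typescript
// Hypothetical sketch: one state machine definition, viewed in two "modes".
// The machine as plain data: state -> (event -> next state).
type Machine = Record<string, Record<string, string>>;

const trafficLight: Machine = {
  green: { TIMER: "yellow" },
  yellow: { TIMER: "red" },
  red: { TIMER: "green" },
};

// Behavioral mode: run the machine. Unknown events leave the state unchanged.
function step(machine: Machine, state: string, event: string): string {
  return machine[state]?.[event] ?? state;
}

// Visual mode: emit Graphviz DOT text that a renderer can draw as a diagram.
function toDot(machine: Machine): string {
  const edges: string[] = [];
  for (const [from, transitions] of Object.entries(machine)) {
    for (const [event, to] of Object.entries(transitions)) {
      edges.push(`  ${from} -> ${to} [label="${event}"];`);
    }
  }
  return ["digraph machine {", ...edges, "}"].join("\n");
}

let light = "green";
for (const event of ["TIMER", "TIMER"]) light = step(trafficLight, light, event);
console.log(light); // prints red
console.log(toDot(trafficLight));
```

Because the machine is data rather than a tangle of `if` statements, an environment could show it as a diagram, let you edit the diagram, and run the edited result, all from one source of truth.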

The closer the tools represent our thoughts, the more thoughts we can represent with our tools.

Developers spend most of their time figuring systems out.

Why so?

Because we have to read a lot of text and try to compile & run it in our head to figure it out.

Our brains shouldn't need to parse, load, and simulate concepts written in code; those concepts should be represented visually in front of us, leaving our brains the space and energy to think about more important things.
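A small piece of this is within reach even with today's languages. As a sketch (the `traceLoop` helper is hypothetical, not an existing library), a tool can run a loop for you and record the state after every iteration. The trace can then be shown as a timeline or animation instead of being simulated in your head. Euclid's greatest-common-divisor algorithm serves as the example loop.

```typescript
// Hypothetical sketch: record every iteration's state so it can be visualized
// (as a timeline, table, or animation) instead of simulated in your head.
interface Snapshot<S> {
  step: number;
  state: S;
}

// Runs `body` while `cond` holds, keeping each state that `body` returns.
// `body` should return a new state object rather than mutate the old one.
function traceLoop<S>(
  initial: S,
  cond: (s: S) => boolean,
  body: (s: S) => S,
  maxSteps = 1000, // guard against infinite loops
): Snapshot<S>[] {
  const trace: Snapshot<S>[] = [{ step: 0, state: initial }];
  let state = initial;
  for (let i = 1; i <= maxSteps && cond(state); i++) {
    state = body(state);
    trace.push({ step: i, state });
  }
  return trace;
}

// Example: Euclid's algorithm for the greatest common divisor of 48 and 18.
const trace = traceLoop(
  { a: 48, b: 18 },
  (s) => s.b !== 0,
  (s) => ({ a: s.b, b: s.a % s.b }),
);

// A plain table stands in here for a richer visual timeline.
console.table(trace.map((t) => ({ step: t.step, ...t.state })));
```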

That’s why the future won't be "no-code" tools; it will be code tools that just aren't solely textual.

Most programming is done in text because text is cheap to write, while visual abstractions of our thoughts are costly to make. So to build the future of IDEs, programming, and design, we'll have to make visual abstractions cheap to make.

The IDEs of the Future

So what would the IDE of the future look like? How will people build the software of the future?

I imagine that developing apps will feel like sketching on paper or playing with play-dough; the tool will gradually disappear until there is nothing between you and the thing you want to build.

The best IDE is one that augments our thinking, rather than one we have to adapt ourselves to: an IDE that lets us transition between abstractions.

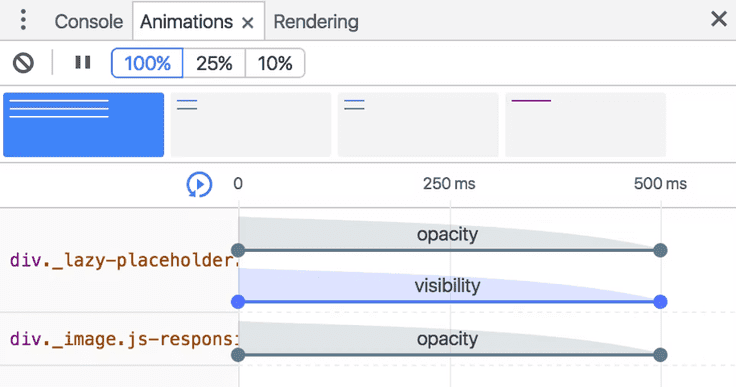

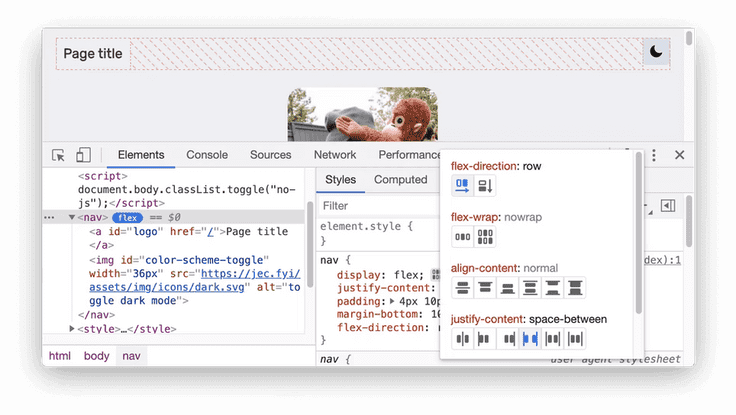

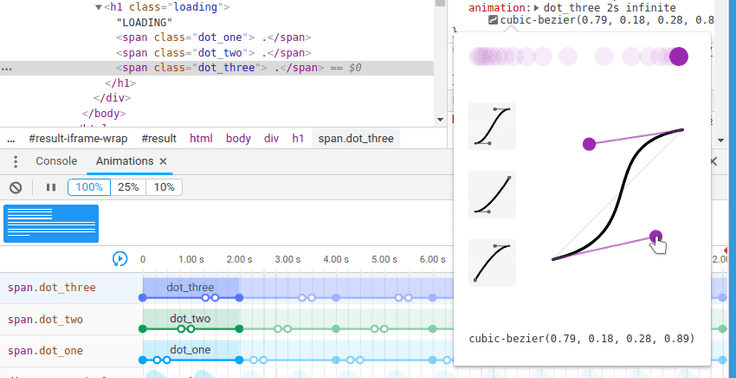

You can already see glimpses of that in our current tooling...

“Leave the limits of text-based editing behind. Design and build complex systems by exploring and manipulating visual, interactive representations of structure and behavior in real-time with immediate feedback. Instead of simulating algorithmic computation in our heads, leverage our unique abilities of embodied cognition.”

Redefining The Browser & The OS

“The first web browser was also an editor. The idea being that not only could everyone read content on the web, but they could also help create it. It was to be a collaborative space for everyone.”

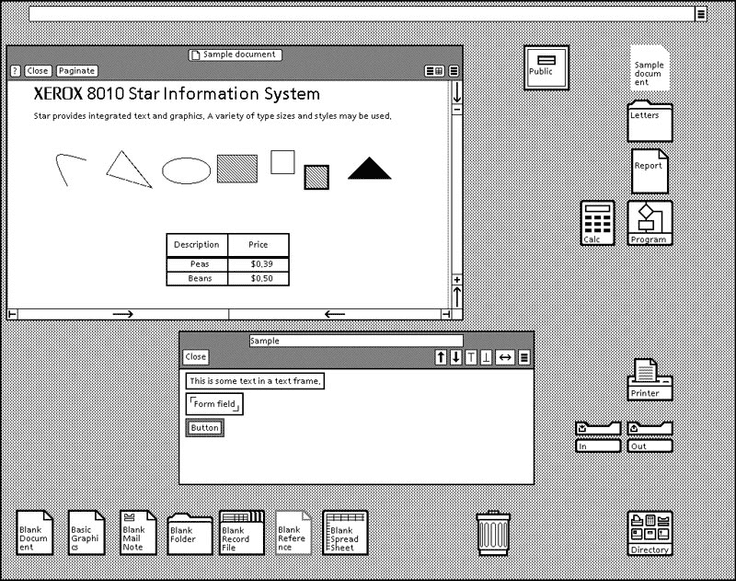

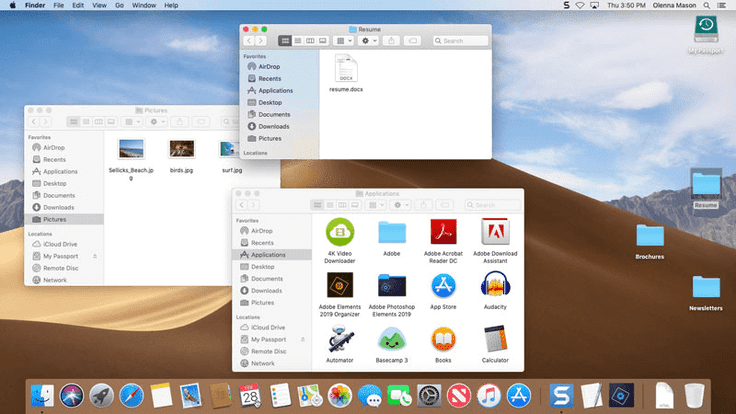

Here's how desktops looked in the 1980s.

Here's how desktops look now.

Slightly different, but kind of the same.

Innovation in the desktop space has stagnated; it still follows the same paradigms that were designed for the ’80s.

The OS needs to be reinvented, not just given a fresh coat of paint. It needs to be built from the ground up, incorporating the needs of the present and learning from the sins of the past.

Playgrounds and Workflows Not Windows and Apps

Today we have each application “windowed” in its own box, confined to its set boundaries, never to touch the spaces around it.

The operating system treats applications as a closed box.

When we want to do something with our computer, we think of a task to do, not an application to open. The operating system needs to reflect that.

The task could be done with one application or several, so what we need is a playground: a space where we can gather our tools and use them together, instead of jumping between multiple applications and windows to get through our workflow.

Merge The Browser With The OS

Most of what we do on a computer now happens in the browser; it has become an extension of our operating system. Yet it's confined: it's treated like any other application, windowed in its own space, when it should be woven into the fabric of our OS.

Decouple the OS

You probably have multiple devices, each with its own operating system, but they all have one thing in common: you!

Your data, your applications, your settings are all scattered across your devices, each device living in its own bubble.

But why should that be the case?

Devices are just interfaces. An operating system should not be tightly coupled to a particular device; it should be a thing of its own. You should be able to switch devices without setting everything up all over again, with all your devices accessing the same data and no need to shuttle things between them through workaround apps.

Some of those pushing browsers and operating systems forward are MercuryOS, The Browser Company, and MakeSpace.

The Future of Learning

Academia has taught us symbols and abstractions, but it didn’t tell us how scientists and inventors arrived at those symbols and abstractions in the first place.

They didn't arrive at their conclusions with the same tools we have today; the symbols were merely the result of their work, not the work itself.

The education system has taught us to memorize the work of our greatest minds but has taught us very little about developing such a mind of our own.

We learned in a mostly static environment where we merely observed the subject. In the future, we'll be able to interact with and explore the material, transitioning between abstractions and tinkering with simulations until we've built an intuitive understanding of the subject.

“The dirty little secret is that the greatest mathematicians don’t actually think in symbols, Einstein himself said that he “did his thing” with “sensations of a kinesthetic or muscular type.” Sure, e=mc² is an equation — a gloriously elegant and simple one. But the point is that the equation isn’t the math; it’s not the insight, the creativity, that actually happened inside Einstein’s head.”

The Computer as a Pair Thinker

For decades, the computer has been a good servant: we think, we give the command, and the computer executes.

The computer has always been good at executing, but when it came to thinking, you were left on your own.

But that won't be the case for long; the computer is going from being a servant to being a pair thinker.

You'll be able to think of a program, and the computer will suggest ways to build it, maybe even ideas you hadn't thought of!

Let's say you're building a chair. Instead of manually designing its shape, materials, or colors, you'd simply think of a chair and say you want it to be lighter, a bit old-fashioned, more natural, able to support heavy loads, taller, optimized for long sitting sessions, more premium, and slightly cheaper to manufacture. The system would then generate a new chair with each iteration.

You'll simply declare what you want instead of imperatively doing it yourself.

You set the constraints, and the tool generates the variations.

The computer drastically cuts the time it takes to make design decisions.

“Describe what instead of how; prefer declarative over imperative ways to describe aspects of a system. Turn the how into an implementation detail. Leave extension points for different implementations.”

At the forefront of making the computer a pair thinker is OpenAI.

Some examples of "pair thinking" are:

- Debuild: Generate functional web apps from a simple English description

- Autodesk Dreamcatcher: Generate CAD models from goals and constraints

- Looka: Generate logos by describing your company

- Lobe.ai: Train AI models with pictures

- And many more to come!

Engineering with the Brain

Developing and designing are essentially about translating ideas in your mind into something tangible. But there is a lot of friction in translating thoughts into keystrokes, and keystrokes into text the computer understands.

Your thoughts move absurdly faster than you can write code.

Let's say you want to draw a smiley face.

In your brain, you can draw that instantly.

You can also draw it fairly fast by hand.

Or you could draw it with a mouse in a drawing tool.

Now try drawing it with code... You'll need to learn a canvas API, pick a fill color, call the function that draws a circle, and pass it the right parameters for size and position. Then you do that again for each of the eyes, and draw and position an arc for the mouth. Only then do you have a smiley face 🙂.
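For comparison, here is roughly what that looks like against the browser's Canvas 2D API in TypeScript. `beginPath`, `arc`, `fill`, `stroke`, `fillStyle`, and `lineWidth` are real members of `CanvasRenderingContext2D`. The `Pen` interface and the recording stub are assumptions added only so the sketch runs outside a browser, and the sizes and positions are arbitrary. A real context from `canvas.getContext("2d")` should be usable in place of the stub.

```typescript
// Minimal subset of the Canvas 2D context used below. In a browser you would
// pass canvas.getContext("2d"); here a recording stub stands in for it.
interface Pen {
  fillStyle: string | object;
  lineWidth: number;
  beginPath(): void;
  arc(x: number, y: number, radius: number, startAngle: number, endAngle: number): void;
  fill(): void;
  stroke(): void;
}

function drawSmiley(ctx: Pen, cx: number, cy: number, r: number): void {
  // 1. Pick a fill color and draw the face.
  ctx.fillStyle = "gold";
  ctx.beginPath();
  ctx.arc(cx, cy, r, 0, 2 * Math.PI);
  ctx.fill();

  // 2. Do it again, once per eye, positioning and sizing each by hand.
  ctx.fillStyle = "black";
  for (const dx of [-r / 3, r / 3]) {
    ctx.beginPath();
    ctx.arc(cx + dx, cy - r / 4, r / 10, 0, 2 * Math.PI);
    ctx.fill();
  }

  // 3. Draw and position an arc for the mouth. Canvas y grows downward,
  //    so sweeping from 0 to PI traces the lower half of a circle: a smile.
  ctx.lineWidth = r / 15;
  ctx.beginPath();
  ctx.arc(cx, cy + r / 10, r / 2, 0, Math.PI);
  ctx.stroke();
}

// A stub that records calls, so the sketch runs outside a browser.
const calls: string[] = [];
const recorder: Pen = {
  fillStyle: "",
  lineWidth: 1,
  beginPath() { calls.push("beginPath"); },
  arc(x, y, radius) { calls.push(`arc(${x}, ${y}, r=${radius})`); },
  fill() { calls.push(`fill ${String(this.fillStyle)}`); },
  stroke() { calls.push("stroke"); },
};

drawSmiley(recorder, 100, 100, 60);
console.log(calls.length); // prints 12
```

That's twelve drawing calls and a handful of hand-picked numbers for one simple face.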

As you can see, that's a lot of friction, and it's very mechanical.

Coding by typing text is not the endgame; coding with thought is.

First we programmed by punching cards, then by typing on keys; next we'll program with movement, and then with thought, where we'll be able to design as fast as we think.

To make that happen, we need to design an interface for the brain, and that's exactly what Neuralink is aiming to do.

The Next Revolution Isn't a Product, But a Medium

We've come to expect that the "Next Big Thing" will come to us on store shelves, or be an install, an update, or a URL away.

But I don't think it will. The "Next Big Thing" won't be a product you hold in your hand, an app you double-click or tap, or a website you visit; it will be something integrated everywhere, the way electricity is integrated everywhere.

A little over a century ago, there were no light bulbs, no cables, no switches, no electricity, nothing to power on or off. Then some thinkers and tinkerers discovered the power of electricity.

But the world didn't change at the moment of discovery; it changed gradually. First there was one light bulb, then there were billions.

Now electricity flows through cables in your hand, on your desk, around your house, along the streets, beneath the ground, across oceans and borders, and even out into Earth's orbit. And that took decades, a whole century in fact.

Electricity is not a product; it's a medium. It wasn't invented by one person or company; it was discovered by a series of scientists building on each other's work. No one owns electricity.

You could say the same about the web: it isn't a product, it's a medium and a protocol. Its invention is commonly attributed to one person, but the web as we know it today was made by all of us. We've shaped it into what it is, and no single person or company owns it.

So I presume that, just as there is electricity in our homes, lights in the streets, water running through pipes beneath them, and gas pumped to our heaters, computers and software will run through our physical world. We won't interact with them through a limited four-inch digital brick in our pockets, but through the world around us, where the technology is invisible but its presence is felt everywhere.

The Future is a User Interface Problem

The future of computers has always been a user interface problem: it was the operating system's GUI, the mouse, and the keyboard that made the computer accessible to so many people.

The same goes for smartphones, where the touch screen and a mobile-friendly UI made mass adoption easy.

So to invent the future, you need to invent its user interface.

But people tend to focus on the device we'll use to access that interface. It doesn't matter as much whether the future interface comes through VR headsets, eyeglasses, contact lenses, brain chips, or big projectors in the sky; those are all just implementation details.

The Imagined Future of Engineering

When we imagine the inventors of the future, we don't picture them tapping away at a keyboard in front of a screen, typing inside a text editor; we imagine them doing...

Direct Manipulation

They interact with their inventions directly and physically, not through a 24-inch rectangular screen.

The Computer as a Pair Thinker & Declarative Executor

They build computers that think with them and for them; the computers accept declarative commands, so the inventors don't have to do everything themselves, imperatively.

Visualized Abstractions

The abstractions they use aren't wholly textual; they're visualized in whatever form is easiest for the inventor to understand.

Interoperability Between Interfaces

The objects and abstractions they use are interoperable between interfaces and are visualized appropriately based on their context.

Organizing Information in Space

Information about objects isn't scattered across multiple files shown side by side on a screen; instead, it's placed in a space where you can move between objects and figure out how they relate to one another.

Collaborative Thinking

Having objects and abstractions placed out in the open, where anybody can see them, allows for collaborative thinking and lets you read your collaborators' state at a glance.

The Slow Ascent to The Open Garden (The Technology Revolution is Yet to Come)

100 years ago, no one could’ve predicted what the future would look like; we could only imagine what it might be.

Likewise, no one today can predict what the world will look like 100 years from now; we can only imagine what it might be.

I imagine a future in an open garden, where bits and atoms coexist in harmony, and where everyone, young and old, can express creativity and thought fluidly and socially, as easily as writing with pen and paper.
